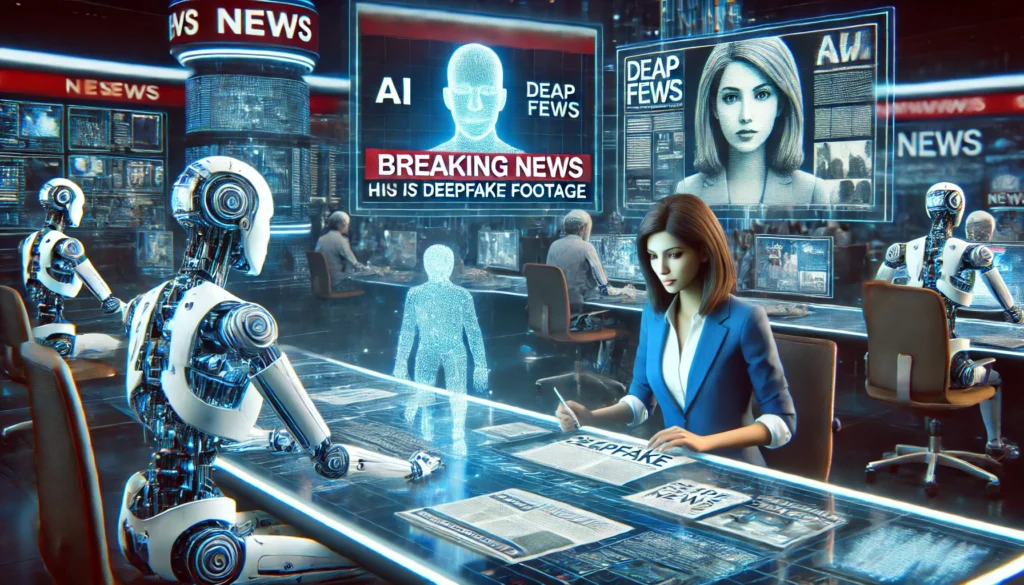

It’s 2025, and AI is everywhere—writing your emails, recommending your next Netflix binge, and now, it’s even creating your news. AI-generated news is no longer a future prediction; it’s here, and it’s changing the way we consume information. But is this a revolution or a disaster waiting to happen? Let’s dive into the impact of AI on journalism and whether it’s delivering news or just serving up a deepfake headline.

The Rise of AI-Generated News: It’s Real, People

AI-generated news isn’t science fiction anymore; it’s happening. Thanks to automated reporting tools, news agencies are now using AI to churn out stories faster than any human journalist could. From sports scores to stock market updates, AI is covering it all.

How? AI can analyze data in real time, recognize patterns, and quickly spit out readable content. The biggest advantage? Speed. Breaking news that would take hours for a journalist to research and write can now be done in minutes. Think about it—AI never needs a coffee break, never calls in sick, and never misses a deadline.

But there’s a catch. The stakes rise as AI expands into weightier subjects such as political events and natural disasters. Stories about corruption or human rights need human judgment, not just algorithms. Without human oversight, it becomes much harder to verify that what we’re reading is accurate. And AI-generated opinion pieces often lack the context and detail that give an argument its weight.

Automated Reporting: The Good, the Bad, and the Ugly

Let’s get real: automated reporting is both a blessing and a problem. It changes how newsrooms work by taking over routine report writing. When automation handles straightforward, commentary-free reports such as financial updates, weather forecasts, and sports scores, journalists have more time for investigative work.

The good news: automated reporting means faster access to information. It keeps us informed in real time, especially when major events are unfolding. Newsrooms can also produce a huge volume of content with far fewer staff. AI can generate thousands of articles from data sets that would take human writers forever to work through.
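What does "articles based on data" look like in practice? Much routine automated reporting works by filling templates from structured records. Here is a minimal Python sketch of that idea. It assumes a hypothetical `GameResult` record and made-up wording rules, and it illustrates the general technique rather than any newsroom's actual system:

```python
from dataclasses import dataclass


@dataclass
class GameResult:
    # Hypothetical structured record, e.g. one row from a league's score feed.
    home: str
    away: str
    home_score: int
    away_score: int


def write_recap(game: GameResult) -> str:
    """Turn one structured game record into a short, readable news sentence."""
    if game.home_score == game.away_score:
        return f"{game.home} and {game.away} played to a {game.home_score}-{game.away_score} draw."

    # Work out the winner and loser from the scores.
    if game.home_score > game.away_score:
        winner, loser = game.home, game.away
        high, low = game.home_score, game.away_score
    else:
        winner, loser = game.away, game.home
        high, low = game.away_score, game.home_score

    # The "pattern" the template reacts to: pick a verb from the margin.
    # These thresholds are illustrative assumptions, not an industry standard.
    margin = high - low
    if margin == 1:
        verb = "edged"
    elif margin < 4:
        verb = "beat"
    else:
        verb = "routed"
    return f"{winner} {verb} {loser} {high}-{low}."


if __name__ == "__main__":
    games = [
        GameResult("Lions", "Tigers", 3, 2),
        GameResult("Bears", "Wolves", 0, 5),
        GameResult("Hawks", "Owls", 1, 1),
    ]
    for g in games:
        print(write_recap(g))
    # Lions edged Tigers 3-2.
    # Wolves routed Bears 5-0.
    # Hawks and Owls played to a 1-1 draw.
```

Run the same function over a full feed of results and you get one recap per game, with no one typing a word. Notice what it can't do: it has no idea whether a result actually matters.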

The bad news? It’s still AI. Even with its ability to analyze data, AI can’t understand context, culture, or irony. Can it really explain why a politician’s actions are significant in a nuanced way? Not so much. It’s like getting a weather forecast but missing the part about the wind chill.

And then there’s the ugly side: AI-written articles can be used to manipulate facts, spread misinformation, or even create deepfake journalism. Remember those AI-generated videos where celebrities say things they never actually said? Imagine that happening with news articles. A well-timed AI article can spread fake news faster than you can say “reliable sources.”

Deepfake Journalism: The Dark Side of AI Reporting

Now let’s talk about the really shady stuff: deepfake journalism. This is where things get… spooky. AI can create realistic text, video, and even audio that appears to come from a legitimate source. Sounds harmless, right? Not once you realize how easily fake news can be generated and how quickly the line between real and fake starts to blur.

For instance, AI can be used to create fake quotes, completely fabricated stories, or even deepfake videos of political figures saying things they never said. It’s the wild west of misinformation, and the laws meant to protect us are still playing catch-up. Deepfake journalism could be used to sway elections, ruin careers, or just stir up chaos.

So, how do we deal with it? There’s no easy answer. Fact-checking AI-generated content is harder than it sounds. Media outlets and social platforms need stricter policies and more advanced AI-detection tools to spot fakes before they go viral. But let’s be honest: how many times have we seen a tweet with a “breaking news” headline, only for it to be debunked hours later?

The Human Element: Why We Still Need Journalists

While AI may be able to cover the basics, it can’t replace human journalists—at least not for the important stuff. Journalists are there to provide insight, analysis, and context. They’re the ones who ask the tough questions, follow up on leads, and dig deep to uncover the truth. AI just doesn’t have that level of curiosity or the ability to challenge the status quo.

AI might help with speed and efficiency, but it’s the journalists who set the agenda and investigate. A robot might tell you the facts, but only a human can tell you why those facts matter. AI lacks the empathy and understanding required for nuanced reporting. And don’t forget: real journalism is built on trust—and you can’t have trust without a human touch.

The Future of AI in Journalism: A Partnership, Not a Takeover

So, is AI going to completely take over journalism? Not likely. Instead, it’ll become a tool that complements human reporting. Just as the camera didn’t replace painters and the computer didn’t replace writers, AI will become one more part of the journalistic toolbox.

What to expect in the future: AI will continue to handle repetitive tasks—making journalists faster and more efficient. But for anything that requires critical thinking, deep analysis, or emotional intelligence, humans will still be in the driver’s seat. AI is the assistant; humans are the ones who make it work.

Final Thoughts: A Brave New World of News

AI in journalism is a double-edged sword. It’s pushing the boundaries of speed and efficiency but also raising serious questions about authenticity, trust, and manipulation. As the technology improves, it’s up to media outlets, tech companies, and, ultimately, us, the consumers, to make sure it’s used responsibly.

So, the next time you see a headline that seems too good to be true, ask yourself: Is it news—or is it a deepfake?